The human brain can quickly recognize patterns and move from details to the “bigger picture”. Think of a pointillist painting. If you look at the painting very closely, you will see many distinct colored dots. As soon as you step back, you will be able to make sense of the whole image.

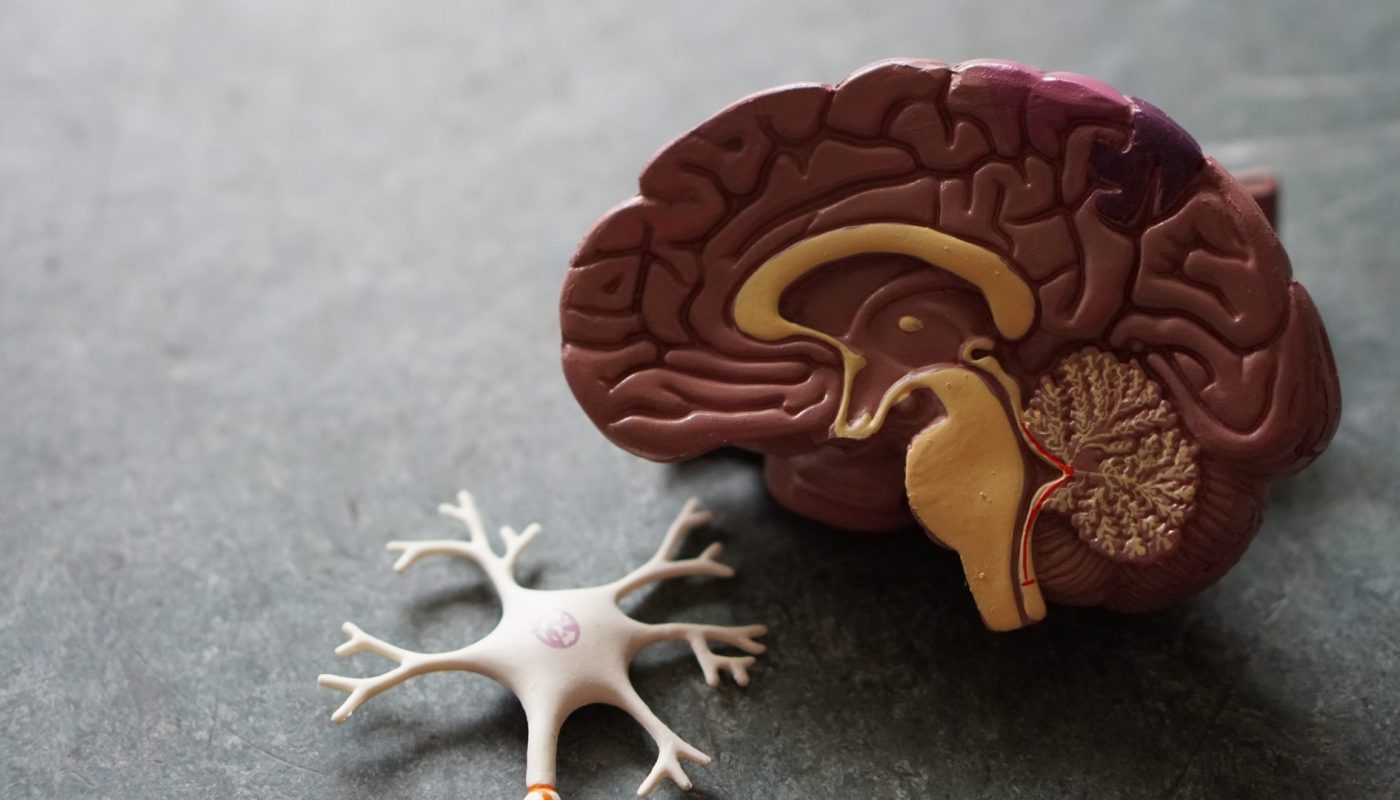

Image recognition in the human brain works in much the same way. Through the retina, the pixel-like inputs coming from a picture are passed through different layers and converted into visual perception. Finally, the picture is interpreted in the visual cortex of the brain. All of this takes less than 200 milliseconds of viewing time. Impressive, right?!

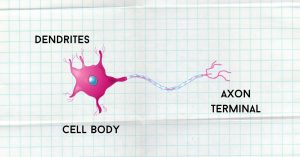

How exactly the brain manages to accomplish this challenging task is still not entirely clear. A recent study has shown that the inputs coming from the retina go through a selection process. During this journey, different weights are assigned to the picture’s features according to their level of importance. What is certain is that our neurons can quickly transform information from one level of representation to another, with higher levels corresponding to more abstract and sophisticated concepts.

A similar process also occurs in machines. Indeed, the whole concept of Deep Learning is inspired by the human brain, more precisely by the structure of its neurons.

Oh, the neurons! If you were busy daydreaming or making paper airplanes during biology class, don’t worry. Here is a short recap. The human brain has billions and billions of interconnected neurons. These brain cells transmit information between different areas of the brain by using electrical impulses and chemical signals. Neurons have dendrites that receive signals (or inputs) from other neurons. These signals are then processed in the cell body, and the output is sent to the next neuron through the axon terminals.

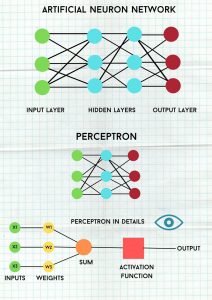

Deep Learning uses neural networks to identify categories and generate outputs (if you don’t know what I am talking about, have a look at my previous blog post). Each layer analyzes a distinct set of features based on the output of the previous layer. In general, the more layers you have, the more complex and sophisticated the features your machine can recognize. This “deep” structure allows the machine to handle data with billions of different features.
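To make the idea of stacked layers a bit more concrete, here is a minimal sketch in Python. It is not how real Deep Learning libraries work internally: each unit is a simple threshold unit (the perceptron described later in this post), and the weights and thresholds are hand-picked for illustration rather than learned. The names `unit`, `layer`, and `network` are made up for this example. The point is only to show that a second layer, working on the outputs of the first, can detect something a single unit cannot: “exactly one of two features is present”.

```python
def unit(inputs, weights, threshold):
    """A single threshold unit: outputs 1 if the weighted sum exceeds the threshold."""
    score = sum(w * x for w, x in zip(weights, inputs))
    return 1 if score > threshold else 0


def layer(inputs, units):
    """Run every unit of a layer on the same inputs and collect their outputs."""
    return [unit(inputs, weights, threshold) for weights, threshold in units]


# Hand-picked (not learned) weights and thresholds, chosen only for illustration.
hidden_layer = [
    ([1, 1], 0.5),     # fires if at least one feature is present (OR)
    ([-1, -1], -1.5),  # fires unless both features are present (NAND)
]
output_layer = [
    ([1, 1], 1.5),     # fires only if both hidden units fired (AND)
]


def network(inputs):
    """Feed the inputs through the hidden layer, then through the output layer."""
    hidden = layer(inputs, hidden_layer)
    return layer(hidden, output_layer)[0]


for a in (0, 1):
    for b in (0, 1):
        print((a, b), "->", network([a, b]))
```

The output layer never sees the raw inputs; it only sees what the hidden layer made of them. That is the sense in which each layer builds on the previous one.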

But now let’s explore the structure of an Artificial Neural Network. The fundamental unit of this network is called a Perceptron. Perceptrons arranged in multiple layers form an Artificial Neural Network.

Just like a neuron in the human brain, the perceptron receives inputs and processes them to produce an output. It works like a decision function that gives a binary output. Let’s clarify it with a simplified example. Suppose you ask the machine to choose a coffee bar where you can study. The following inputs will be considered:

- X1 = comfortable tables and chairs

- X2 = internet access

- X3 = white walls

The machine assigns weights, namely numbers expressing the importance of the respective inputs, to determine how much each input matters for finding the perfect coffee bar. A weight of 4 (important) is attributed to input X1 because comfortable chairs and tables are necessary to study for a long time; a weight of 6 (very important) is given to input X2 because an Internet connection is essential when studying. A negative weight of -2 is attributed to input X3 because the color of the walls does not help your study conditions (unless you are a very distracted student). Strictly speaking, a weight of 0 would mean “makes no difference”, while a negative weight makes white walls count slightly against the bar.

After the inputs have been weighted and summed, the activation function gives an output that is either a 0 or a 1. This output is determined by whether the weighted sum is bigger or smaller than a selected threshold. If the final score is above that threshold, then you will choose that coffee bar as a place to study.

If the output is wrong (maybe the bar was chosen because of the color of its walls), the error is sent back, and the weights are adjusted to calculate a new output. This process is called “Perceptron Learning” and aims at repeatedly changing the weights until the error is as close as possible to zero.
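The coffee bar decision and the learning loop can be sketched in a few lines of Python. Treat this as an illustration under some assumptions, not as a definitive implementation: the threshold of 5 is my own pick (the example above does not fix one), the training data is made up, and the `train` function uses the classic perceptron update rule, which nudges the threshold along with the weights. The names `choose`, `train`, and `EXAMPLES` are invented for this sketch.

```python
def choose(inputs, weights, threshold):
    """Return 1 (study here) if the weighted sum of inputs exceeds the threshold, else 0."""
    score = sum(w * x for w, x in zip(weights, inputs))
    return 1 if score > threshold else 0


# Weights from the example: X1 = comfortable tables and chairs,
# X2 = internet access, X3 = white walls. Each input is 1 (yes) or 0 (no).
WEIGHTS = [4, 6, -2]
THRESHOLD = 5  # assumption: the example does not give a threshold value

print(choose([1, 1, 0], WEIGHTS, THRESHOLD))  # score 4 + 6 = 10, above the threshold
print(choose([1, 0, 1], WEIGHTS, THRESHOLD))  # score 4 - 2 = 2, below the threshold


def train(examples, weights, threshold, learning_rate=1, max_epochs=100):
    """Perceptron learning rule: adjust weights and threshold after each wrong answer."""
    weights = list(weights)
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - choose(inputs, weights, threshold)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                # Move each weight toward the right answer, only for active inputs.
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                threshold -= learning_rate * error
        if mistakes == 0:  # a full pass without errors: stop adjusting
            break
    return weights, threshold


# Made-up training data: a bar is a good choice only if it has both
# comfortable seating and internet access; wall color is irrelevant.
EXAMPLES = [
    ([1, 1, 0], 1), ([1, 0, 1], 0), ([0, 1, 1], 0), ([0, 0, 1], 0),
    ([1, 1, 1], 1), ([1, 0, 0], 0), ([0, 1, 0], 0),
]

learned_weights, learned_threshold = train(EXAMPLES, [0, 0, 0], 0)
print(learned_weights, learned_threshold)
```

Starting from weights of zero, the loop keeps correcting itself until every example in the made-up data is classified correctly. This only works because the data can be separated by a single weighted sum; for patterns that cannot, a single perceptron will never reach zero error.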

OK, by now you should have a feel for how the decision logic of a machine works.

But how can we build intelligent machines? How can we transfer knowledge into computers? I will tell you more in the next blog posts! For the moment, you can support machine learning research by helping Google’s neural network learn to recognize doodles… have fun!

P.S. Feel free to leave a comment if you have questions or want to know more about a particular area of Deep Learning! 😀

Photo by Robina Weermeijer on Unsplash

References:

LinkedIn Course Artificial Intelligence Foundations: Neural Networks

A Beginner’s Guide to Neural Networks and Deep Learning

A 2019 Beginner’s Guide to Deep Learning: Part 1

How the Brain Processes Images

Thank you! This article is very useful for a paper about machine learning that I am writing. It is very clear and written in a simple way.

Hi Agnese, thank you for your comment. I am glad it helped you. Feel free to ask for specific areas of Deep Learning you’d like to know about. I will include them in my next blog posts 🙂

Wow!!! Machines and technology never fail to amaze me. Super interesting article, can’t wait to read more!

Thank you, Veronica! Stay tuned for the next posts, and do not hesitate to ask if you need clarifications or more examples 🙂

Thanks for the brain wash! Very interesting topic and cool post!

Happy you liked it!:) and this is just the basic/non-engineering version 🙂