In this blog post, we will explore how generative AI has transformed the way prototypes are created, and the benefits that it brings to the development process. More specifically, we will highlight tools that have led to an increase in speed and democratization of rapid prototyping and compare them to pre-AI workflows.

Traditionally, rapid prototyping ("RP") describes the process of quickly designing and building a basic version of software in order to test and refine its functionality. Despite the name, this can be time-consuming, as developers and designers have to go through several steps. Put simply, RP consists of the following stages:

- Product/feature ideation

- Low- and high-fidelity mockup development

- Programming

Let’s look at how generative AI supports and speeds up this process, and even enables novices to dip their toes into app development.

Product/Feature Ideation

Traditional Approach

Then as now, the starting point should be a problem that needs a solution. For the purpose of this post, we assume we have identified the following problem: authorities in Zurich should be informed of illegal garbage disposal, and we would like to develop an app that addresses it. Historically, working out how to solve such a problem meant lengthy brainstorming sessions, internet searches, competitor analysis, interviews, and other research.

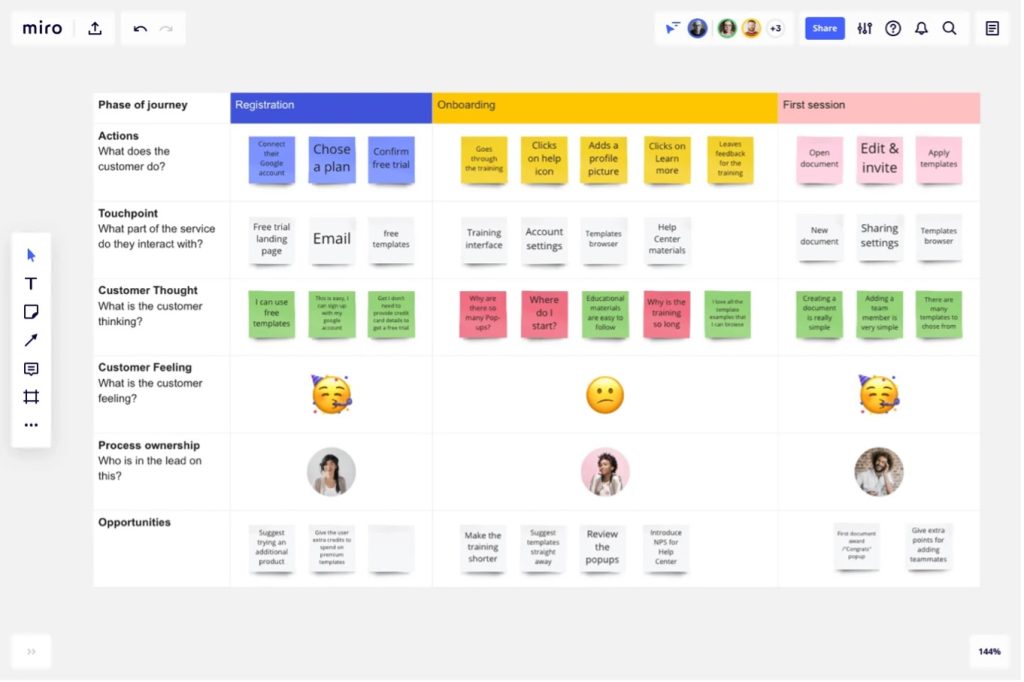

The results would then be consolidated in, for example, a Miro board or another tool for online collaboration and brainstorming, as seen in Figure 1.

Impact of Generative AI

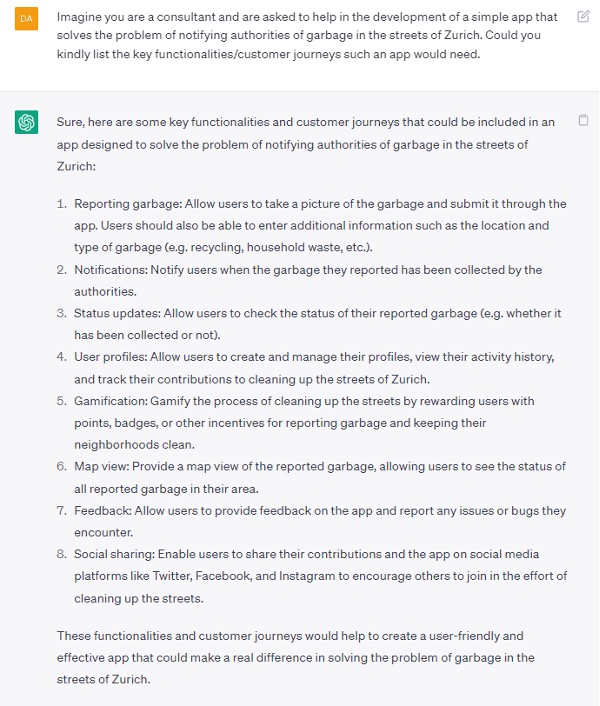

Today, a simple query to ChatGPT gives a good idea of the key functionalities this app would need in less than a minute. The functionalities ChatGPT proposes for the problem at hand will very likely need to be refined down the line, but they provide a great starting point for developing a prototype of the app (see Figure 2).

Low- and High-Fidelity Mockup Development

Traditional Approach

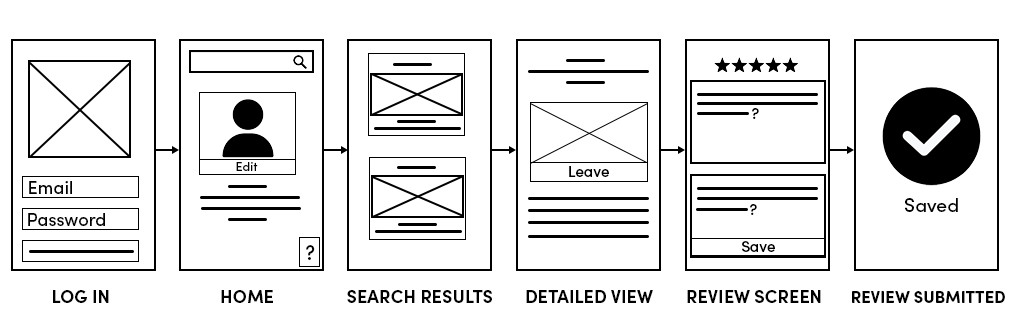

Once we have identified the key functionalities we wish to cover, we create so-called low-fidelity mockups, i.e. line drawings called wireframes that roughly illustrate the intended idea or functionality. While wireframes can be developed using pen, paper, and scissors, dedicated web-based tools such as Balsamiq have positioned themselves as easy to access and use, even without great drawing skills. However, they still come with a steep learning curve and require a certain understanding of user experience design to be put to good use.

These low-fidelity mockups (see Figure 3) can then be used to discuss and test an idea with third parties, or to compare multiple versions in order to validate key assumptions. Moreover, they can serve as the basis for the flows a user would walk through (to learn more about low- and high-fidelity prototyping, see here).

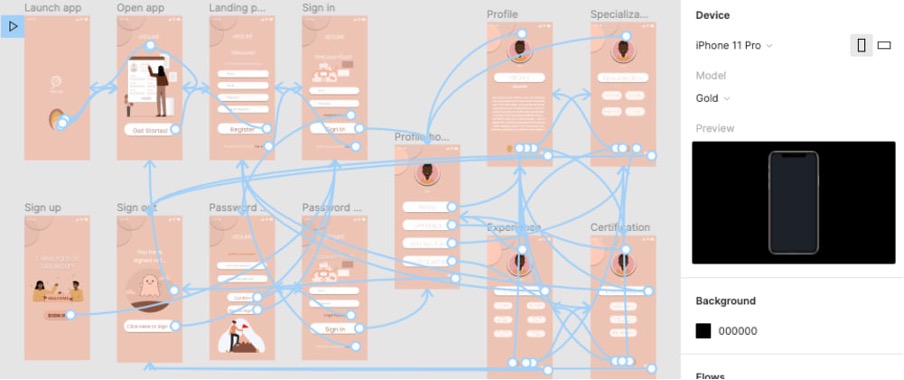

After the basic idea has been validated, a designer would be in charge of translating these rough illustrations into high-fidelity screens that resemble the real product. This requires a sound understanding of interface design and user experience, as well as experience with tools such as Figma, likely the most widely used tool for creating high-fidelity screens. Here, each screen is meticulously crafted, aligned, and linked to the others to simulate an actual app interaction (see Figure 4).

Impact of Generative AI

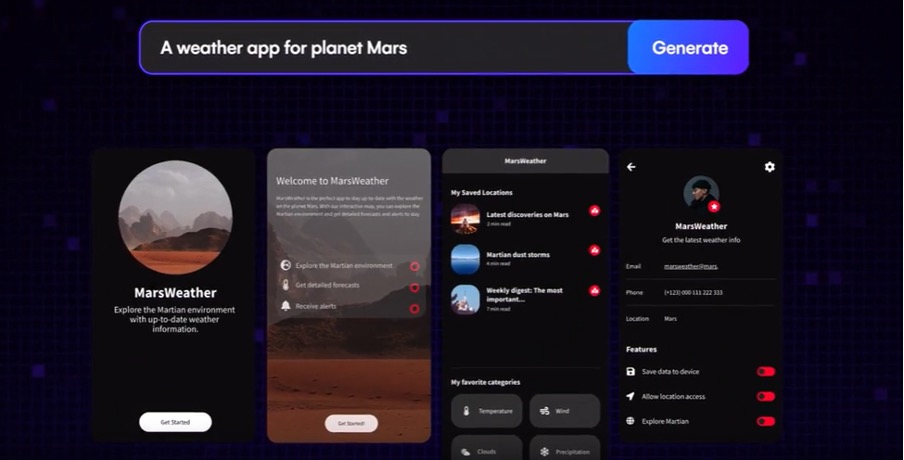

While there are several tools that create images from text input, e.g., Midjourney or DALL·E 2, the generation of user interfaces is still at a very early stage at the time of writing. However, Uizard’s Autodesigner (not yet publicly available) promises to generate entire user interfaces from a single sentence of input (see Figure 5). While I could not test the functionality, I believe that more input will need to be provided in order to generate meaningful output, as opposed to output that is only visually pleasing. I expect that within a few years’ time, AI-based user interface designers will use inputs such as the one provided by ChatGPT (see Figure 2) to generate accurate user interfaces matching the required functionalities.

Programming

With the advent of generative AI, developers can now use automated tools to create prototypes quickly and efficiently. While these tools are not yet very sophisticated, they provide a glimpse of what is to come. A future blog post might look at this in more detail.